This post was inspired by a recent picture I came across on X (formerly Twitter):

Storage Pool 2 (Damaged)

My career is over.

I set up RAID 10 with four HDDs, but two of them failed within two hours. They have held my code for the past seven or eight years.

Tomorrow, I am going to try some enterprise-level data recovery services. When I saw DAMAGED, my heart stopped.

I bought two WD disks and two Seagate disks from different batches on purpose, but they still crashed simultaneously.

I am taking this opportunity to write down how I handle home data backup and redundancy, and how I balance them against privacy.

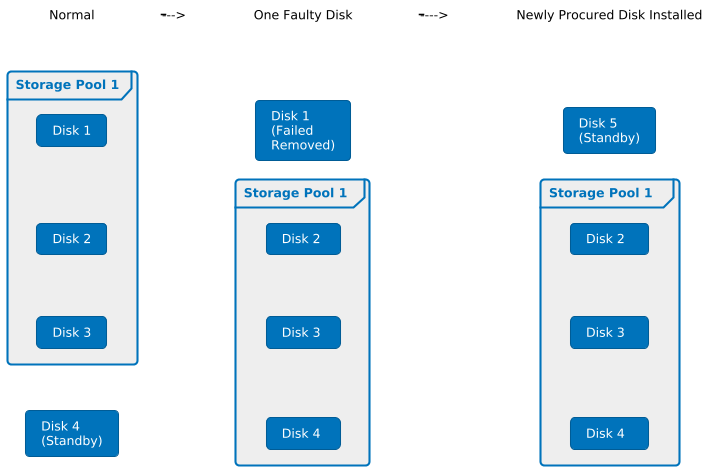

Let me start with the picture above. As a long-time NAS user, I have also had two disks fail at nearly the same time. Back then, I had only just started using a NAS, so I did not depend on it much. As soon as I noticed that one disk had failed, I shut the NAS down and ordered a new disk. Less than half an hour after I replaced the faulty disk with the new one, another disk failed as well. Had I not shut the NAS down in the meantime, I would have lost data.

As I came to rely on the NAS more, I found it hard to shut it down whenever a disk failed. On top of that, delivery in Singapore is much slower than in China, and waiting a week for a new disk is not unusual. So I started thinking about how to reduce the chance of two disks failing concurrently without shutting the NAS down.

Once one disk has failed, there is little you can do to prevent a second failure beyond shutting the NAS down, reducing disk I/O, and so on. So, from my perspective, it is more realistic to shorten the time the pool runs with the first failed disk, and the way to do that is to keep a standby disk.

How to Reduce the Risk of a Double Disk Failure in a NAS: A Standby Disk

My NAS has four bays, but I use only three disks to build a storage pool with Synology Hybrid RAID (SHR). The fourth disk is kept as a standby disk rather than as part of the storage pool.

- When a disk in the storage pool fails, I immediately replace it with the standby disk and remove the failed disk from the NAS.

- I then order a new disk and, once it arrives, install it in the NAS as the new standby disk. This keeps the time the pool spends with a failed disk as short as possible.

- If another disk fails while the new disk is still on the way, I still shut the NAS down.
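Why does shortening the degraded window help so much? A rough sketch, assuming each surviving disk fails independently at a constant rate derived from a hypothetical annualized failure rate (AFR) of 2%, and assuming a 24-hour window for swapping in the standby disk and rebuilding versus a 168-hour (one-week) wait for delivery. Both numbers are illustrative, not measurements. Independence is optimistic: disks of the same age under rebuild stress tend to fail together, as the story at the top shows, so the real risk is likely higher.

```python
import math

HOURS_PER_YEAR = 24 * 365


def second_failure_probability(afr: float, surviving_disks: int, window_hours: float) -> float:
    """Probability that at least one surviving disk fails within the window.

    Assumes independent disks with a constant (exponential) failure rate
    derived from the annualized failure rate (AFR). Correlated failures
    are ignored, so this likely underestimates the real-world risk.
    """
    # Constant hourly rate such that 1 - exp(-rate * HOURS_PER_YEAR) == afr
    rate_per_hour = -math.log(1.0 - afr) / HOURS_PER_YEAR
    # All k surviving disks stay healthy for T hours with probability exp(-k * rate * T)
    return 1.0 - math.exp(-surviving_disks * rate_per_hour * window_hours)


if __name__ == "__main__":
    afr = 0.02     # hypothetical 2% AFR per disk
    surviving = 2  # 3-disk SHR pool with one disk already failed
    for label, hours in [("wait for delivery (1 week)", 168), ("swap in standby (1 day)", 24)]:
        p = second_failure_probability(afr, surviving, hours)
        print(f"{label}: {p:.4%}")
```

For small probabilities the risk scales almost linearly with the window, so cutting a one-week wait down to one day cuts the chance of a second failure by roughly a factor of seven under these assumptions.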

Given enough chances to happen, even a low-probability event becomes inevitable.

Anything that can go wrong will go wrong.

Murphy’s Law
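The point above is the complement rule: if an event has probability p on each independent trial, the chance it happens at least once in n trials is 1 - (1 - p)^n, which approaches 1 as n grows. A small sketch; the one-in-a-thousand probability in the usage is a made-up figure for illustration.

```python
import math


def probability_at_least_once(p: float, trials: int) -> float:
    """Chance that an event with per-trial probability p occurs at least once."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must be strictly between 0 and 1")
    # Complement rule: 1 minus the chance that it never happens in any trial
    return 1.0 - (1.0 - p) ** trials


def trials_until_likely(p: float, target: float = 0.5) -> int:
    """Smallest number of independent trials after which the event has
    happened at least once with probability >= target."""
    if not 0.0 < p < 1.0 or not 0.0 < target < 1.0:
        raise ValueError("p and target must be strictly between 0 and 1")
    # Solve 1 - (1 - p)^n >= target for n: n >= log(1 - target) / log(1 - p)
    return math.ceil(math.log(1.0 - target) / math.log(1.0 - p))


if __name__ == "__main__":
    p = 0.001  # hypothetical one-in-a-thousand chance per trial
    for n in (10, 100, 1000, 5000):
        print(f"{n:>5} trials: {probability_at_least_once(p, n):.1%}")
    print("trials for a 50% chance:", trials_until_likely(p))
    print("trials for a 99% chance:", trials_until_likely(p, 0.99))
```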

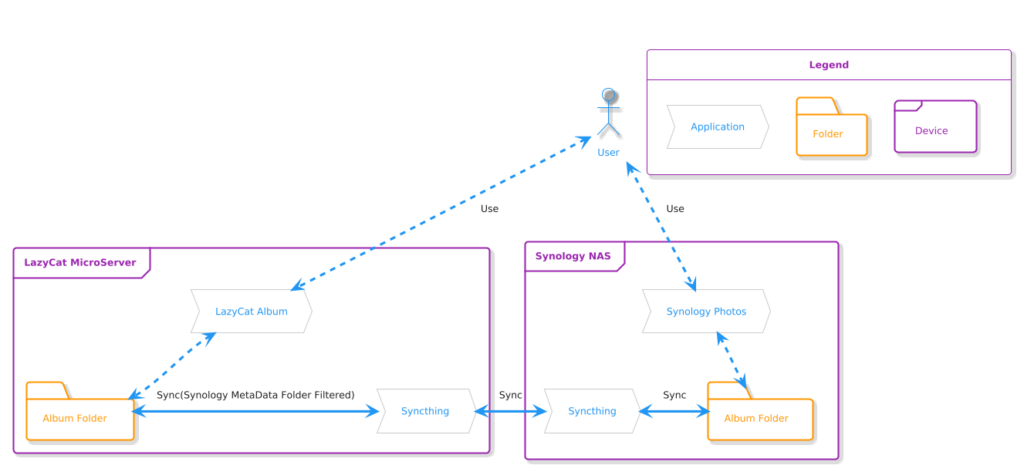

Obviously, putting all your eggs in one basket (the NAS) is not reliable; after all, RAID provides data redundancy, not data backup. As I mentioned in my previous post, Unconventional Usage of LazyCat MicroServer, I also rely on the LazyCat MicroServer to back up some important data, such as photos, as shown in the following figure:

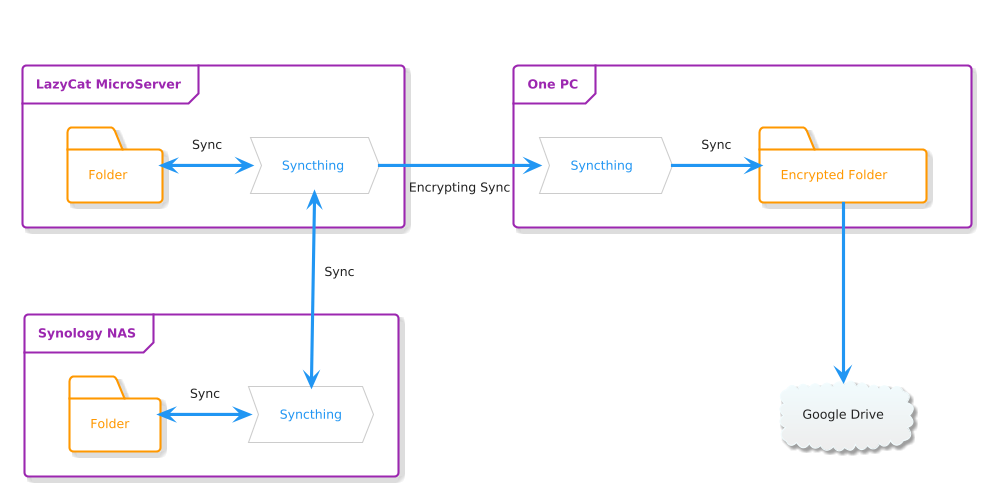

In fact, for data as important as photos, this is still far from enough. The NAS and the LazyCat MicroServer are both at home, so strictly speaking this does not count as off-site disaster recovery. So I also use cloud storage to build an acceptable version of “3 data centers in 2 locations” 😂.

An Acceptable Version of “3DC in 2LOC”

I thought through a few simple points before settling on the implementation plan:

- In my opinion, family photos are extremely private, so I had always hesitated to store them in the cloud. Given the limits of local-only storage for keeping data safe, I had to compromise and accept uploading encrypted photos to the cloud. In the end, I chose Syncthing because it can encrypt the data before syncing it.

- The cloud storage service had to offer strong guarantees of privacy protection, compatibility, and stability, so I ultimately chose Google Drive.

Thus, the plan was confirmed.
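To make the home-scale rule concrete, here is a hypothetical checker: a plan passes if it keeps at least three copies in at least two distinct locations and, for private data, every copy outside the home is encrypted. The rule set, the `Copy` type, and the location labels are my own illustration of the reasoning above, not a formal standard and not anything Syncthing or Google Drive provides.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    """One copy of a dataset. Target names and locations are illustrative."""
    target: str
    location: str
    encrypted: bool = False


def meets_3dc_2loc(copies: list[Copy], private: bool) -> bool:
    """Check a plan against a hypothetical '3 copies in 2 locations' rule.

    Requires at least three copies and at least two distinct locations;
    for private data, every copy stored outside "home" must be encrypted.
    """
    if len(copies) < 3:
        return False
    if len({c.location for c in copies}) < 2:
        return False
    if private and any(c.location != "home" and not c.encrypted for c in copies):
        return False
    return True


if __name__ == "__main__":
    photos = [
        Copy("NAS", "home"),
        Copy("LazyCat MicroServer", "home"),
        Copy("Google Drive", "cloud", encrypted=True),  # encrypted before upload
    ]
    print("photo plan passes:", meets_3dc_2loc(photos, private=True))
    print("home-only plan passes:", meets_3dc_2loc(photos[:2], private=True))
```

Dropping the cloud copy fails the location check, and uploading private photos unencrypted fails the privacy check, which mirrors the two constraints listed above.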

Of course, this setup cannot protect all of my data. From a backup perspective, complete coverage is impossible anyway, and costs also have to be kept under control. If you do not care about cost, feel free to skip the next section. 😂

Data Classification

Before proceeding with data backup and redundancy, it is necessary to classify the data first.

I classify all my data into four persistence levels:

- Level 1: Data that should survive even after I am gone

- Level 2: Data that should survive as long as I am alive

- Level 3: Data I would rather not lose

- Level 4: Not important

I also classify the data into three privacy levels:

- Level 1: Absolute privacy

- Level 2: Not suitable for public disclosure

- Level 3: Can be made public

Based on the classifications above, I drafted a simple scheme matrix:

| Persistence Level | Privacy Level | Example | Plan | Misc |
|---|---|---|---|---|
| Level 1 | Level 1 | Private projects and related data | NAS + LazyCat + Google Drive (encrypted) | Acceptable version of “3DC in 2LOC” |
| Level 1 | Level 2 | Non-private contracts, documents, etc. | NAS + LazyCat + Google Drive | |
| Level 1 | Level 3 | NA | | |
| Level 2 | Level 1 | NA | | |
| Level 2 | Level 2 | Non-private contracts, documents, etc. | GitHub Private Repo + Google Drive | Dual cloud |
| Level 2 | Level 3 | Open-source projects and related data | GitHub Public Repo + Google Drive | Dual cloud |
| Level 3 | Level 1 | Purchased digital media | NAS + LazyCat | Dual local storage; private due to copyright |
| Level 3 | Level 2 | NA | | |
| Level 3 | Level 3 | NA | | |
| Level 4 | Level 1 | Insignificant documents and data scattered across various devices | NA | |
| Level 4 | Level 2 | Insignificant documents and data scattered across various devices and clouds | NA | |
| Level 4 | Level 3 | Insignificant documents and data scattered across various devices and clouds | NA | |
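The matrix can also be read as a plain lookup table keyed by (persistence level, privacy level). A minimal sketch, assuming integer levels numbered as in the two lists above; `BACKUP_MATRIX` and `backup_targets` are hypothetical names, and combinations marked NA simply map to no dedicated backup.

```python
from __future__ import annotations

# (persistence level, privacy level) -> backup targets, transcribed from the matrix.
# Combinations marked NA (and all of persistence Level 4) are absent on purpose.
BACKUP_MATRIX: dict[tuple[int, int], tuple[str, ...]] = {
    (1, 1): ("NAS", "LazyCat", "Google Drive (encrypted)"),
    (1, 2): ("NAS", "LazyCat", "Google Drive"),
    (2, 2): ("GitHub Private Repo", "Google Drive"),
    (2, 3): ("GitHub Public Repo", "Google Drive"),
    (3, 1): ("NAS", "LazyCat"),
}


def backup_targets(persistence: int, privacy: int) -> tuple[str, ...]:
    """Return where data of the given levels should be copied.

    Persistence levels run 1-4 and privacy levels 1-3; any valid
    combination not in the matrix gets an empty tuple (no dedicated backup).
    """
    if not (1 <= persistence <= 4 and 1 <= privacy <= 3):
        raise ValueError(f"unknown levels: persistence={persistence}, privacy={privacy}")
    return BACKUP_MATRIX.get((persistence, privacy), ())


if __name__ == "__main__":
    for levels in [(1, 1), (2, 3), (3, 1), (4, 2)]:
        targets = backup_targets(*levels)
        print(levels, "->", " + ".join(targets) if targets else "no dedicated backup")
```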

The dual-cloud solution in the table above is common enough that it needs no elaboration. The NAS + LazyCat solution is the same as the one described in my previous post, Unconventional Usage of LazyCat MicroServer. That’s all for now.